Stanford University’s Center for Research on Foundation Models (CRFM) has collaborated with Arabic.AI to create HELM Arabic, a public leaderboard that measures the performance of Arabic large language models on standardized Arabic benchmarks. The platform extends the existing HELM evaluation framework to Arabic-language tasks.

Stanford CRFM Collaborates with Arabic.AI on HELM Arabic

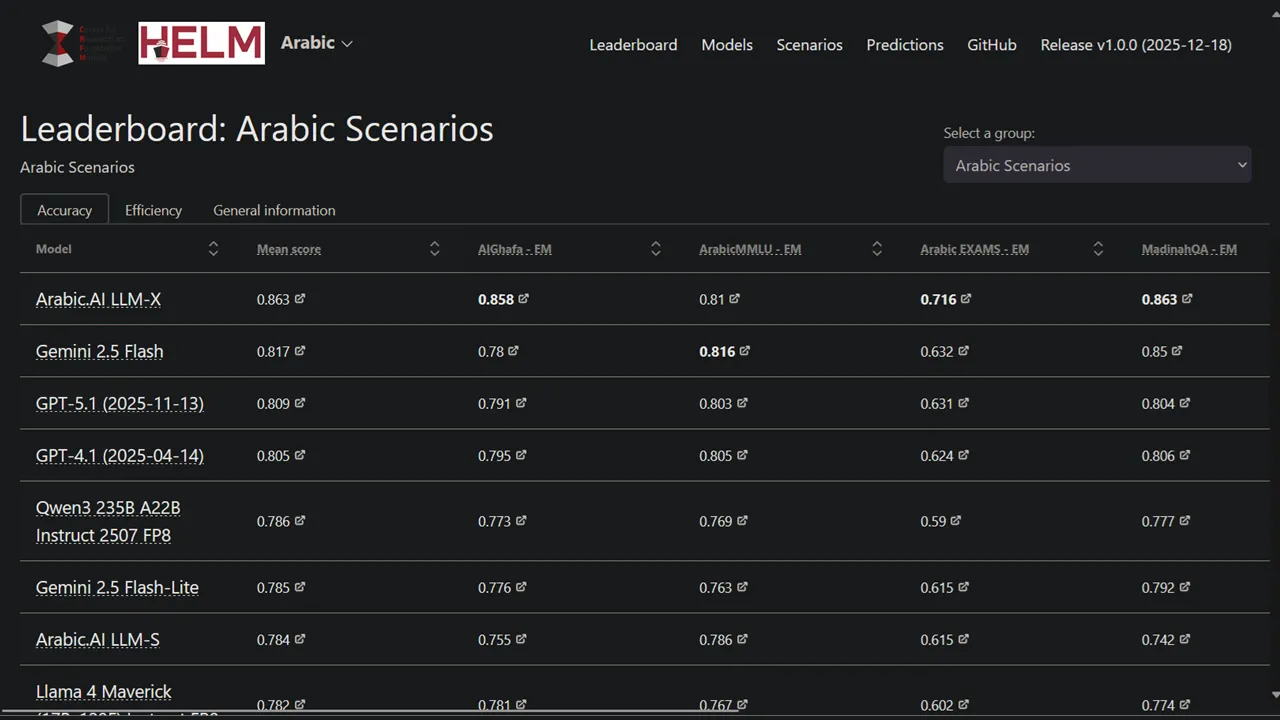

The HELM Arabic leaderboard evaluates models on seven Arabic-language benchmarks: AlGhafa, ArabicMMLU, Arabic EXAMS, MadinahQA, AraTrust, ALRAGE, and ArbMMLU-HT. All are drawn from established Arabic datasets. Together they cover multiple-choice reasoning, question answering, grammar understanding, safety evaluation, and academic knowledge.

Evaluation Methodology Used by Stanford CRFM and Arabic.AI

Stanford CRFM and Arabic.AI apply the same standardized evaluation process to every model. Instruction-tuned models are prompted zero-shot, with no worked examples in the prompt. Multiple-choice tasks label the answer options with Arabic letters rather than Latin characters. The evaluation samples 1,000 examples per task subset to balance dataset distributions. Optional reasoning features are disabled so that all models are compared under the same conditions. The leaderboard records the full prompts and model outputs to support reproducibility.
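The steps above can be sketched in a few lines of Python. This is only an illustration and not the HELM Arabic code: the function names, the prompt wording, the specific Arabic letters, and the fixed random seed are all assumptions, since the article does not give those details.

```python
import random

# Assumed option labels; the article says only that Arabic letters
# replace Latin ones, not which letters are used.
ARABIC_LABELS = ["أ", "ب", "ج", "د", "هـ"]


def format_zero_shot_prompt(question, options):
    """Build a zero-shot multiple-choice prompt (no worked examples)."""
    if len(options) > len(ARABIC_LABELS):
        raise ValueError("more options than available Arabic labels")
    lines = [f"السؤال: {question}"]  # hypothetical "Question:" prefix
    for label, option in zip(ARABIC_LABELS, options):
        lines.append(f"{label}. {option}")
    lines.append("الإجابة:")  # hypothetical "Answer:" suffix
    return "\n".join(lines)


def sample_subset(examples, max_examples=1000, seed=0):
    """Return at most max_examples items from one task subset.

    A fixed seed keeps the sample identical across runs, which is one
    simple way to make an evaluation repeatable.
    """
    examples = list(examples)
    if len(examples) <= max_examples:
        return examples
    return random.Random(seed).sample(examples, max_examples)
```

Capping every subset at the same size means that a very large subset cannot dominate a task's score, which is one plausible reading of "balancing dataset distributions".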

Model Rankings and Benchmark Results

In the initial HELM Arabic results, Arabic.AI LLM-X (Pronoia) achieved the highest overall score across all seven tasks. Among open-weights models, Qwen3 235B ranked highest by mean score. Other open-weights models appearing in the top ten include Llama 4 Maverick, Qwen3-Next 80B, and DeepSeek v3.1. Several Arabic-focused models, such as AceGPT-v2, ALLaM, JAIS, and SILMA, were evaluated but did not rank above leading multilingual models.
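As a rough illustration of ranking by mean score, the sketch below averages each model's scores over the seven tasks and sorts the result. It assumes an unweighted mean, which the article does not confirm, and the model names and numbers are placeholders, not leaderboard results.

```python
from statistics import mean

TASKS = [
    "AlGhafa", "ArabicMMLU", "Arabic EXAMS", "MadinahQA",
    "AraTrust", "ALRAGE", "ArbMMLU-HT",
]


def rank_by_mean_score(scores):
    """Rank models by their unweighted mean score over all seven tasks."""
    means = {}
    for model, per_task in scores.items():
        missing = [task for task in TASKS if task not in per_task]
        if missing:
            raise ValueError(f"{model} is missing scores for {missing}")
        means[model] = mean(per_task[task] for task in TASKS)
    # Highest mean first.
    return sorted(means.items(), key=lambda item: item[1], reverse=True)


# Placeholder scores for two made-up models (not real results).
example_scores = {
    "model_a": {task: 0.50 for task in TASKS},
    "model_b": {task: 0.75 for task in TASKS},
}
ranking = rank_by_mean_score(example_scores)
```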

Purpose of the HELM Arabic Platform

The collaboration aims to address gaps in the evaluation infrastructure for Arabic models. HELM Arabic provides a transparent way to compare proprietary and open models on the same benchmarks, and it allows researchers to replicate results and track progress in Arabic language modeling over time.

Source: https://www.middleeastainews.com/p/stanford-crfm-collabs-with-arabic