Meta’s AI research team is getting closer to decoding human thoughts. The company, in collaboration with the Basque Center on Cognition, Brain, and Language, has developed an AI model that reconstructs typed sentences from brain activity, correctly predicting up to 80% of the characters.

The research relies on a non-invasive brain recording method and, according to the company, could pave the way for technology that helps people who have lost the ability to speak.

How It Works

Unlike many existing brain-computer interfaces, which require invasive implants, Meta’s approach records brain activity with magnetoencephalography (MEG) and electroencephalography (EEG).

These techniques measure brain activity without surgery. The AI model was trained on brain recordings from 35 volunteers as they typed sentences. When tested on new sentences, Meta claims the model correctly predicts up to 80% of the typed characters from MEG recordings, at least twice the performance of EEG-based decoding.
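To make the "80% of characters" figure concrete, here is a minimal Python sketch of how a character-level accuracy score can be computed. The article does not say exactly how Meta scored its model, so this assumes a common convention: accuracy is one minus the character error rate, where the error rate is the edit distance between the typed sentence and the decoded sentence divided by the length of the typed sentence. The function names and the sample sentences are illustrative, not taken from Meta's work.

```python
def levenshtein(reference: str, hypothesis: str) -> int:
    """Minimum number of single-character insertions, deletions,
    or substitutions needed to turn `hypothesis` into `reference`."""
    # prev[j] holds the distance between the first i-1 reference
    # characters and the first j hypothesis characters.
    prev = list(range(len(hypothesis) + 1))
    for i, ref_char in enumerate(reference, start=1):
        curr = [i]
        for j, hyp_char in enumerate(hypothesis, start=1):
            cost = 0 if ref_char == hyp_char else 1
            curr.append(min(
                prev[j] + 1,         # drop a reference character
                curr[j - 1] + 1,     # drop a hypothesis character
                prev[j - 1] + cost,  # match or substitute
            ))
        prev = curr
    return prev[-1]


def character_accuracy(reference: str, hypothesis: str) -> float:
    """Assumed metric: 1 - (edit distance / reference length), floored at 0."""
    if not reference:
        raise ValueError("reference sentence must not be empty")
    error_rate = levenshtein(reference, hypothesis) / len(reference)
    return max(0.0, 1.0 - error_rate)


if __name__ == "__main__":
    typed = "the quick brown fox"    # what the volunteer typed (made up)
    decoded = "the quick brwn fox"   # what a decoder might output (made up)
    print(f"character accuracy: {character_accuracy(typed, decoded):.2%}")
```

Under this convention, a score of 80% would mean roughly one character in five is wrong, missing, or extra, which is why a sentence decoded at that level can still be readable but is rarely perfect.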

This method still has limitations. MEG requires a magnetically shielded room, and participants must remain still for accurate readings. The technology has also only been tested on healthy individuals, so its effectiveness for those with brain injuries remains uncertain.

AI Is Also Mapping How We Form Words

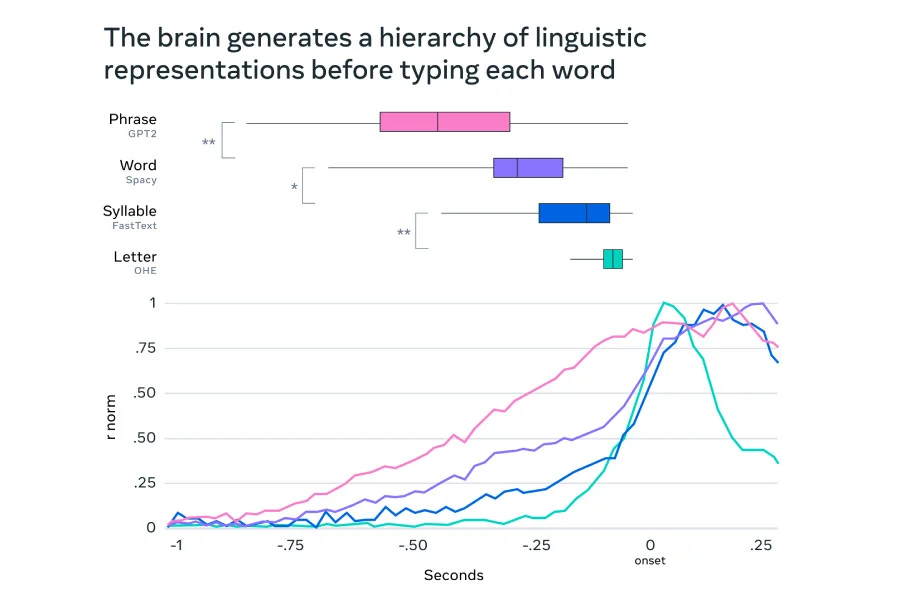

Beyond decoding thoughts into text, Meta’s AI is also helping researchers understand how the brain turns ideas into language. The AI model analyzes MEG recordings, tracking brain activity at millisecond resolution. It reveals how the brain transforms abstract thoughts into words, then syllables, and finally the individual finger movements used in typing.

A key finding is that the brain uses a ‘dynamic neural code’—a mechanism that chains together different stages of language formation while keeping past information accessible. This could explain how people seamlessly structure sentences while speaking or typing.

Meta’s research reaffirms that AI could one day enable non-invasive brain-computer interfaces for people who can’t communicate verbally. But for now, the technology isn’t ready for real-world use. Decoding accuracy needs improvement, and MEG’s hardware limitations make it impractical outside lab settings.

Meta is investing in partnerships to advance this research. The company has announced a $2.2 million donation to the Rothschild Foundation Hospital to support ongoing studies. It’s also working with institutions like NeuroSpin, Inria, and CNRS in Europe.

Read more at: https://www.gizmochina.com/2025/02/16/metas-ai-can-now-read-your-mind-with-80-accuracy/