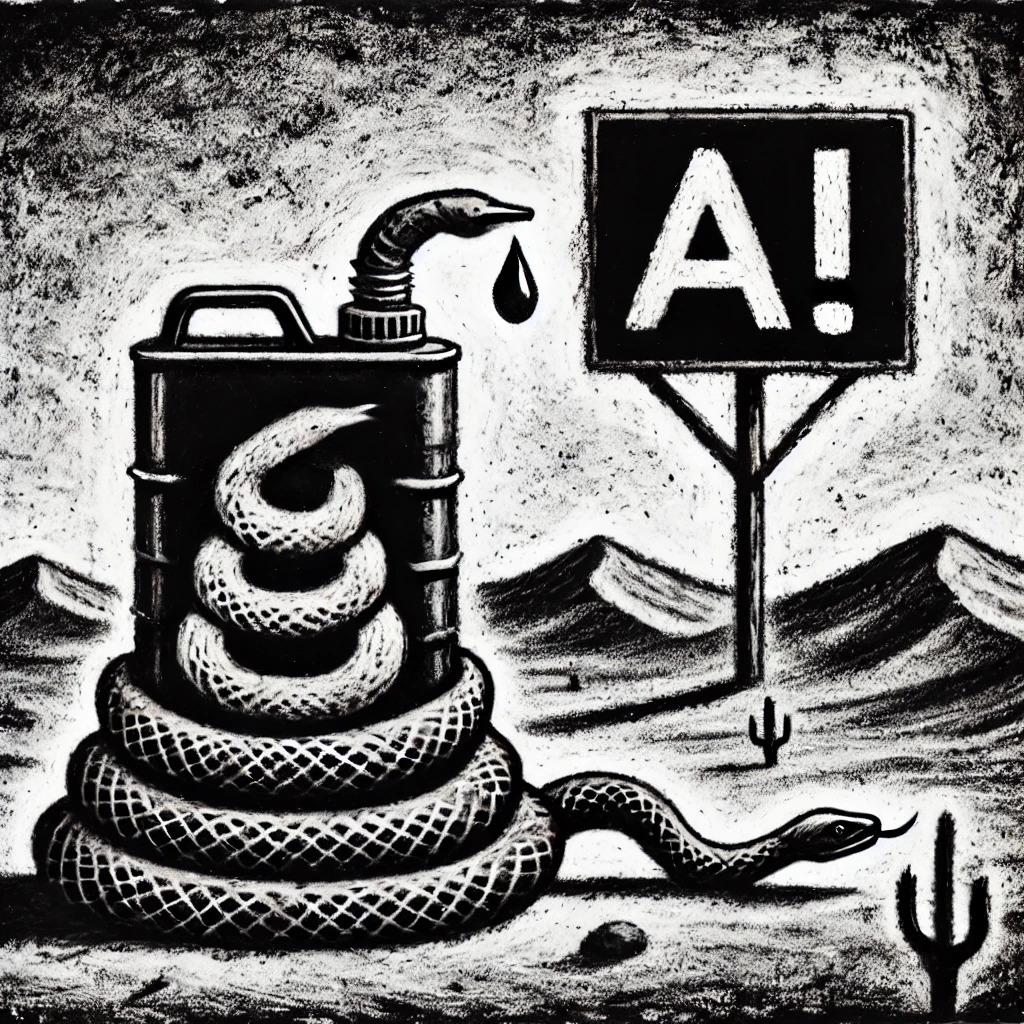

The buzz around generative artificial intelligence (AI) feels unavoidable, with companies and media outlets touting AI’s seemingly endless potential. However, two Princeton researchers argue that this relentless hype is clouding public understanding of the technology’s limitations and risks. In their book AI Snake Oil, Arvind Narayanan and Sayash Kapoor aim to cut through the noise and offer a critical lens on the AI hype cycle.

The AI Hype Cycle

AI Snake Oil examines how AI’s capabilities are routinely overhyped, a pattern the authors believe has created false expectations and distorted public understanding of AI’s real-world impact. According to Narayanan and Kapoor, a major issue is the tendency to portray AI as an omnipotent force, with little regard for its limitations, ethical implications, or potential harms.

“The hype surrounding AI has given the public a skewed view of its capabilities,” says Narayanan. “It’s often portrayed as this magical solution to everything, when in reality, the technology is much more nuanced.”

The book examines the driving forces behind AI hype, including commercial interests, media exaggeration, and a lack of comprehensive public education on the subject. By dissecting these factors, Narayanan and Kapoor argue that it’s crucial to develop a more realistic understanding of AI to avoid the “snake oil” that has become rampant in the tech world.

A Call for Education

Rather than discouraging the use of AI, the authors advocate for better education and critical thinking about the technology. They argue that tackling AI’s hype head-on through education will help people navigate the promises and pitfalls of AI, leading to a more balanced and informed discourse.

“We’re not anti-AI,” Kapoor clarifies. “But we do want to encourage a more thoughtful, measured understanding of how AI works, what it can and cannot do, and how it impacts society. This can only happen if we address the educational gap that exists.”

The book emphasizes the importance of teaching AI literacy in schools and universities, promoting transparency among AI developers, and encouraging the media to present AI advancements more responsibly. By fostering critical engagement with AI, the researchers hope to combat the cycle of hype that leads to misinformation.

Shifting the Conversation

Narayanan and Kapoor’s work comes at a time when AI is being integrated into nearly every industry, from healthcare to finance, entertainment to education. The authors believe that the public needs to be equipped with the tools to critically evaluate AI’s role in their lives, rather than being swept up in the excitement of the latest tech trends.

“We need a more holistic approach to understanding AI,” says Narayanan. “This isn’t just about the technology—it’s about the ethical, social, and economic implications that come with it.”

As AI continues to evolve, Narayanan and Kapoor hope their book will serve as a resource for those seeking a more grounded understanding of what AI is and what it isn’t. Their message is clear: rather than falling for the hype, it’s time to confront AI with education and critical thought.