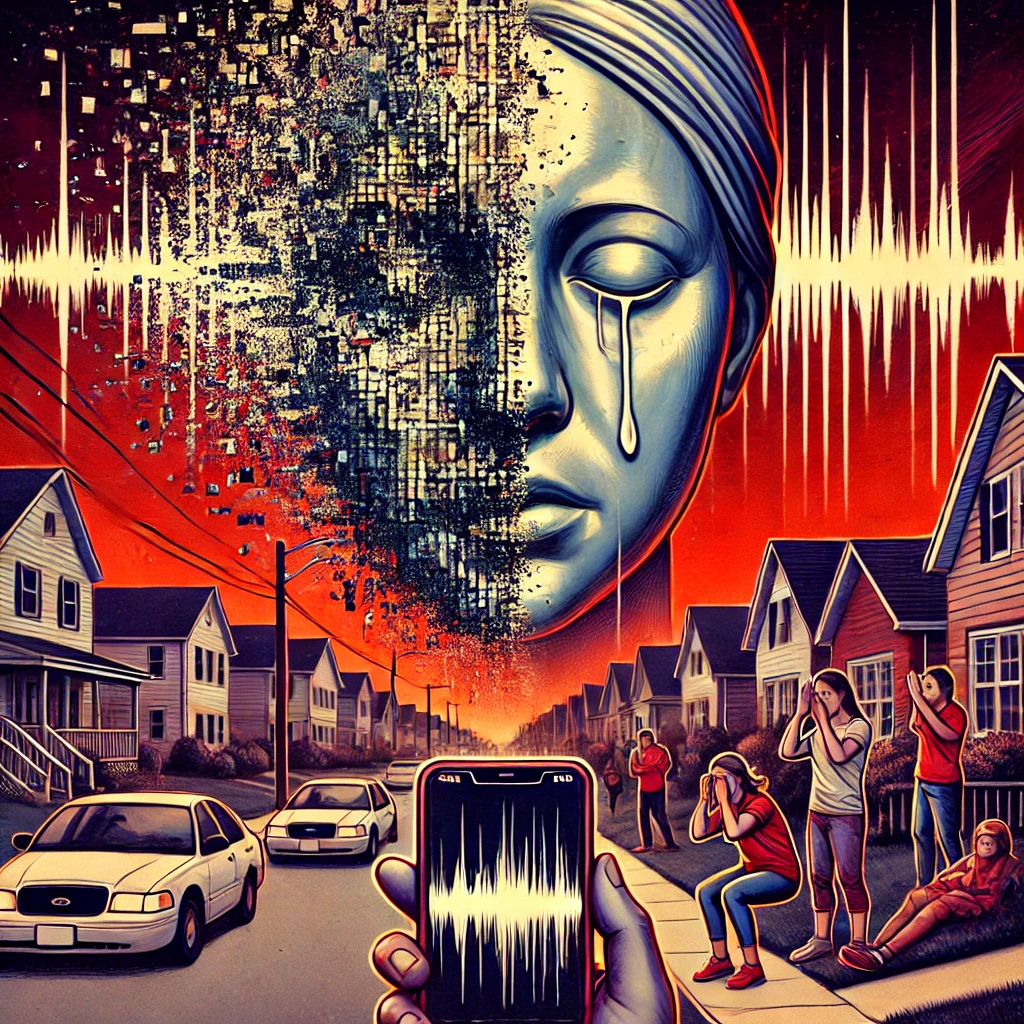

In an age when artificial intelligence (AI) can mimic human behavior with startling accuracy, a fabricated audio clip wreaked havoc in a quiet suburb outside Baltimore. The recording, which appeared to capture a local school principal making racist and derogatory comments, went viral soon after it surfaced. The controversy that followed plunged the community into a maelstrom of outrage: the principal received death threats, and public discourse spiraled into division. But what many took for an offensive, candid moment turned out to be an insidious manipulation, an AI-generated deepfake. Although the truth eventually emerged, a perplexing question lingers: why do some people still believe the recording is real?

The Power of Viral Outrage

The audio clip first appeared on social media, posted by an anonymous account that claimed to have inside knowledge of bigoted comments the principal had made in a private conversation. Within hours, the clip had been reposted thousands of times, inflaming racial tensions and igniting heated debates. Residents, parents, and students called for the principal’s immediate dismissal, and some resorted to threats of violence. For many, the audio seemed irrefutable proof of prejudice, leaving little room for doubt or patience.

The principal denied making the comments, but in a climate of heightened awareness of social justice issues, many dismissed the denial as a reflexive attempt at self-preservation. The vitriol of the online conversation accelerated the spread of misinformation and fanned public anger.

Exposing the Deepfake

As the controversy escalated, a small group of technologists and concerned citizens began scrutinizing the clip more closely. Using audio analysis tools to compare the alleged voice with verified recordings of the principal, they found discrepancies in tone, pacing, and timbre. Their investigation showed that the audio was not a genuine recording but an AI-generated deepfake.

Deepfake audio tools clone a voice by training on samples of a person’s speech, allowing the AI to reproduce that person’s speech patterns so convincingly that even people familiar with the voice can struggle to detect the forgery. In this case, the deepfake was built to make it sound as though the principal had made offensive comments that were never actually spoken.

Although the deepfake was exposed, the damage had already been done. Trust was eroded, and emotions ran high. Despite overwhelming evidence that the clip was fabricated, a significant portion of the community still clung to the belief that it was real. This phenomenon, in which facts are dismissed or ignored in favor of a compelling narrative, raises troubling questions about why misinformation persists.

Why Do People Still Believe It’s Real?

- Confirmation Bias: Many people are prone to accepting information that confirms their pre-existing beliefs. If someone already believed the principal harbored racist views, the deepfake audio served as the “proof” they needed to reinforce that belief. Even when confronted with evidence that the clip was fake, their perception remained anchored in that initial confirmation.

- Emotional Impact: The emotional charge of the content shaped opinions from the start. The racist comments in the clip provoked strong reactions, leading people to form judgments before fully processing the details. Once people are emotionally invested in a narrative, that investment is hard to undo, even when contrary facts emerge.

- Distrust in Authority: Widespread distrust of authority figures, institutions, and the media made matters worse. For many, the explanation that the audio was an AI-generated fake felt like an attempt by powerful entities to cover up wrongdoing. In an era when trust in official narratives is fragile, alternative explanations, no matter how far-fetched, can seem more believable.

- Technological Skepticism: Ironically, even as AI deepfakes grow more sophisticated, many people still find it hard to believe that such convincing fabrications are possible. Skepticism about AI’s capabilities led some to dismiss the idea that the audio could have been generated artificially. This unfamiliarity with how deepfakes work left the door open to doubting the debunking itself.

- Social Media Dynamics: Social media platforms amplified the clip and kept the controversy alive. Their algorithms prioritize content that drives engagement, and polarizing material like the audio clip attracted significant attention. As a result, even after the deepfake was debunked, the clip kept circulating and reached new audiences who were unaware of the full context.

The Broader Implications

This incident shows how deepfake technology can be weaponized to undermine trust and sow division. As the technology grows more sophisticated, the potential for misuse grows with it, posing significant risks to individuals, institutions, and even democracy itself.

While AI holds great promise in many fields, the dark side of its potential is becoming more apparent. Deepfakes, once dismissed as a niche concern, are now a serious societal threat, capable of ruining reputations, destabilizing communities, and fueling misinformation campaigns. As the technology becomes more accessible, efforts to educate the public about its dangers and to develop tools for detecting and countering its misuse must keep pace.

Lessons Learned

The racist AI deepfake that fooled and divided this Baltimore-area community serves as a cautionary tale. It underscores the importance of critical thinking in the digital age, where not everything that sounds or looks real can be trusted. Communities need to develop resilience against misinformation, recognizing the power of technology to deceive and the responsibility each of us has to verify information before passing judgment.

The principal was eventually cleared of any wrongdoing, but the scars left by the deepfake remain. For some, the truth was enough to heal the wounds; for others, the narrative that first resonated was too compelling to abandon. This is the paradox of living in a world where technology can manipulate reality so precisely that even the truth struggles to catch up.

The future demands a balance between harnessing the benefits of AI and safeguarding against its capacity to distort and divide. For this community, learning where that balance lies came at a high price, but its experience offers a stark lesson for the rest of society. The next deepfake could be even more convincing, and its consequences even more devastating.